Hao Chen, Mario Valerio Giuffrida, Peter Doerner, Sotirios A. Tsaftaris

CVPPP Workshop (2018)

Hao Chen, Mario Valerio Giuffrida, Peter Doerner, Sotirios A. Tsaftaris (2018) “Root Gap Correction with a Deep Inpainting Model,” Computer Vision Problems in Plant Phenotyping.

Abstract

Imaging the roots of growing plants in a non-invasive and affordable fashion has been a long-standing problem in image-assisted plant breeding and phenotyping. One of the most affordable and widespread approaches is the use of mesocosms, where plants are grown in soil against a glass surface that permits root visualization and imaging. However, because soil hides parts of the root, and because the root image is a 2D projection of a 3D object, parts of the root are occluded. As a result, even under perfect root segmentation, the resulting images contain several gaps that may hinder the extraction of fine-grained root system architecture traits.

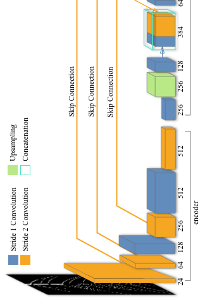

We propose an effective deep neural network that recovers the gaps between disconnected root segments. We train a fully supervised encoder-decoder deep CNN that takes an image containing gaps as input and generates an inpainted version in which the missing parts are recovered. Since ground truth is lacking for real data, we train and evaluate our approach on synthetic root images that we artificially perturb by introducing gaps. We show that our network reduces root gaps in both dicot and monocot cases. We also show promising example results on real data from chickpea root architectures.
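The abstract does not say how the gaps are introduced into the synthetic images. The sketch below shows one way to build a supervised (input, target) pair for an inpainting network. It assumes binary root masks stored as lists of lists and square occlusions centred on randomly chosen root pixels. Both assumptions are ours, not the authors' procedure, and the helpers `introduce_gaps` and `make_training_pair` are hypothetical names.

```python
import random


def introduce_gaps(mask, num_gaps, gap_size, seed=None):
    """Return a copy of a binary root mask with square gaps cut out.

    Hypothetical helper (assumption): each gap is a gap_size x gap_size
    square centred on a randomly chosen root (foreground) pixel, and every
    pixel inside it is set to 0. Squares are clipped at the image border.
    """
    rng = random.Random(seed)
    height, width = len(mask), len(mask[0])
    gapped = [row[:] for row in mask]  # never mutate the original mask
    root_pixels = [(r, c) for r in range(height) for c in range(width) if mask[r][c]]
    if not root_pixels:
        return gapped
    half = gap_size // 2
    for r0, c0 in rng.sample(root_pixels, min(num_gaps, len(root_pixels))):
        for r in range(max(0, r0 - half), min(height, r0 - half + gap_size)):
            for c in range(max(0, c0 - half), min(width, c0 - half + gap_size)):
                gapped[r][c] = 0
    return gapped


def make_training_pair(mask, num_gaps, gap_size, seed=None):
    """Hypothetical helper: the gapped mask is the network input and the
    intact synthetic mask is the supervised inpainting target."""
    return introduce_gaps(mask, num_gaps, gap_size, seed), [row[:] for row in mask]


if __name__ == "__main__":
    # A toy 9x9 "root": a single vertical line in column 4.
    root = [[1 if c == 4 else 0 for c in range(9)] for _ in range(9)]
    inp, target = make_training_pair(root, num_gaps=1, gap_size=3, seed=0)
    for row in inp:
        print("".join("#" if v else "." for v in row))
```

Because the target is the unperturbed synthetic image, no manual annotation is needed. This is the reason synthetic data makes full supervision possible when real ground truth is missing.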