Agisilaos Chartsias, Thomas Joyce, Mario Valerio Giuffrida, Sotirios A. Tsaftaris

IEEE Transactions on Medical Imaging (2017)

Agisilaos Chartsias, Thomas Joyce, Mario Valerio Giuffrida, Sotirios A. Tsaftaris (2017) “Multimodal MR Synthesis via Modality-Invariant Latent Representation,” IEEE Transactions on Medical Imaging.

Abstract

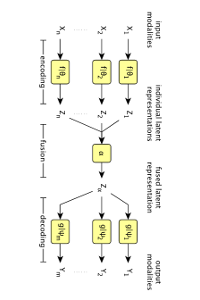

We propose a multi-input, multi-output fully convolutional neural network model for MRI synthesis. The model is robust to missing data, as it benefits from, but does not require, additional input modalities. The model is trained end-to-end, and learns to embed all input modalities into a shared modality-invariant latent space. These latent representations are then combined into a single fused representation, which is transformed into the target output modality with a learnt decoder. We avoid the need for curriculum learning by exploiting the fact that the various input modalities are highly correlated. We also show that by incorporating information from segmentation masks, the model can both decrease its error and generate data with synthetic lesions. We evaluate our model on the ISLES and BRATS datasets and demonstrate statistically significant improvements over state-of-the-art methods for single-input tasks. This improvement increases further when multiple input modalities are used, demonstrating the benefits of learning a common latent space, again resulting in a statistically significant improvement over the current best method. Lastly, we demonstrate our approach on non-skull-stripped brain images, producing a statistically significant improvement over the previous best method. Code is made publicly available at https://github.com/agis85/multimodal_brain_synthesis.
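The encode, fuse, decode pipeline described in the abstract can be illustrated with a toy NumPy sketch. It is not the authors' implementation. The abstract does not name the fusion operator, so the sketch assumes an element-wise maximum, which accepts any non-empty subset of inputs. The encoders and decoder are random per-pixel maps standing in for learnt convolutional networks, and the names `make_encoder`, `make_decoder`, `fuse`, `synthesize`, and the modality labels `T1` and `T2` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_encoder(n_channels):
    # Hypothetical stand-in for a learnt convolutional encoder:
    # a random per-pixel map from one image channel to n_channels.
    w = rng.normal(size=(n_channels,))
    def encoder(image):
        # image: (H, W) -> latent: (C, H, W)
        return np.tanh(w[:, None, None] * image[None, :, :])
    return encoder

def make_decoder(n_channels):
    # Hypothetical stand-in for a learnt decoder: a random linear
    # combination of latent channels at every pixel.
    w = rng.normal(size=(n_channels,))
    def decoder(latent):
        # latent: (C, H, W) -> image: (H, W)
        return np.tensordot(w, latent, axes=1)
    return decoder

def fuse(latents):
    # ASSUMPTION: element-wise max as the fusion operator. It is defined
    # for any non-empty list, so missing modalities are simply left out.
    if len(latents) == 0:
        raise ValueError("at least one input modality is required")
    return np.max(np.stack(latents, axis=0), axis=0)

def synthesize(inputs, encoders, decoder):
    # Embed each available modality, fuse, then decode to the target.
    latents = [encoders[name](image) for name, image in inputs.items()]
    return decoder(fuse(latents))

encoders = {"T1": make_encoder(4), "T2": make_encoder(4)}
decoder = make_decoder(4)
t1 = rng.random((8, 8))
t2 = rng.random((8, 8))

out_both = synthesize({"T1": t1, "T2": t2}, encoders, decoder)
out_t1_only = synthesize({"T1": t1}, encoders, decoder)  # T2 missing
print(out_both.shape, out_t1_only.shape)
```

Because the fused representation has the same shape however many inputs are supplied, the same decoder serves both calls. This is one way to read the claim that the model benefits from, but does not require, additional modalities.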